-

AI Is Not Replacing Jobs, It’s Replacing the Work Inside Them

There has been a lot of discussion recently around Mustafa Suleyman’s statement that most white-collar tasks could be automated within the next 12–18 months. A large part of the reaction has been skeptical, often dismissing the claim and insisting that AI will not replace knowledge workers in that timeframe. Whole Interview: https://youtu.be/YTrBz6Z5c0E?si=RAEqEXtu4ltHZkut…

-

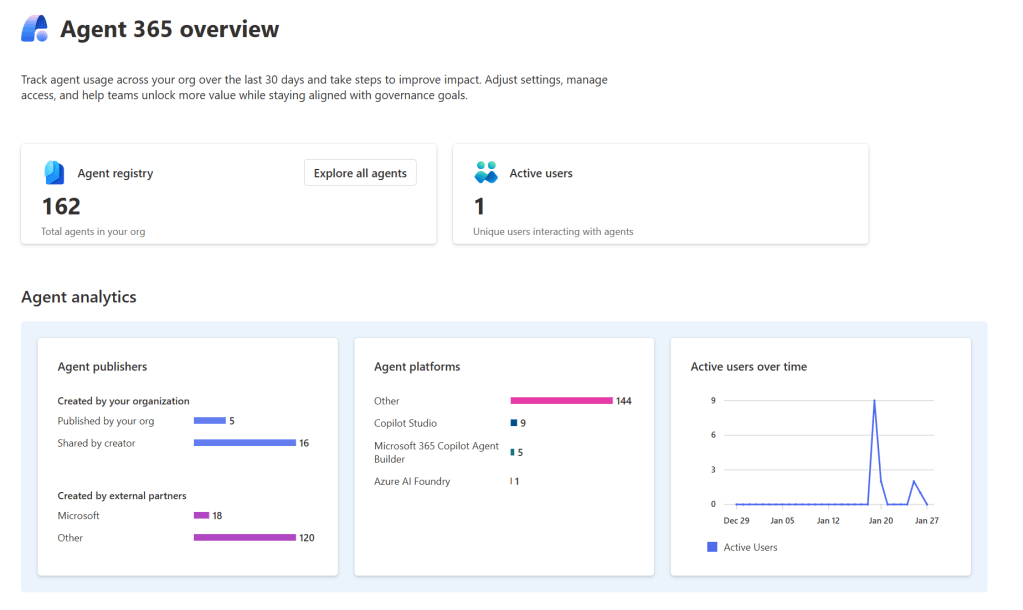

AI Agent Governance Is Changing – What Power Platform Admins Must Know?

Last week, I had a session in M365 Show about a topic that many Power Platform admins are only beginning to recognize in their daily work: the governance model we have relied on for years no longer fully applies to AI agents. Scaling AI Agents Securely: Next-Level Governance for Power…

-

Teaching Agents to Work: How to Design a Copilot Agent?

Although I mostly focus on agents and Copilot Studio governance, I also work on the building side. Most importantly, discussing, listening to, and teaching agents and their possibilities is part of my everyday work. During my studies, my major was information systems, not software technology. I’ve…

-

Sync Documents to Copilot Studio Agent

If you plan to use SharePoint or OneDrive documents as a knowledge source for your Copilot Studio agent, there is now a better way to bring those documents to the agent than linking through the traditional connector or uploading them. The new document sync feature will keep your…

-

Guide to SharePoint Channel in Copilot Studio

SharePoint and an agent are perhaps the most-used combination, and something many of you build when creating your first Copilot agents. One constant request we hear is to publish the Copilot Agent to a SharePoint site. Previously, this was only possible by embedding the agent to…

Hello,

I’m Mikko

I am a Principal Consultant for Copilot and Power Platform at Sulava. My primary work involves Copilot Studio, Copilot Agents, Power Platform, SharePoint, and Office 365 solutions. I usually act as a system and solution architect or lead consultant in my projects and participate in customer offer processes.