There has been a lot of discussion recently around Mustafa Suleyman’s statement that most white-collar tasks could be automated within the next 12–18 months. Much of the reaction has been skeptical, often dismissing the claim on the grounds that AI will not replace knowledge workers in that timeframe.

Full interview: https://youtu.be/YTrBz6Z5c0E?si=RAEqEXtu4ltHZkut

That may well be true. But it is also beside the point.

The statement is not really about jobs. It is about tasks. And if you look at it from that perspective, the argument becomes much harder to dismiss.

In most organizations, work is still discussed at the level of roles. But AI does not operate at that level. It operates at the level of tasks, and that is exactly where the change is happening.

Most knowledge work is still execution

When people talk about white-collar work, they tend to focus on expertise, decision-making, and problem-solving. In reality, a large share of time is still spent on execution.

If you look at how work actually happens, it includes a lot of:

- drafting documents and reports

- summarizing and interpreting information

- preparing presentations

- structuring material

- producing first versions of deliverables

These tasks are not the most visible part of the work, but they take up a significant amount of time. And they are exactly the areas where AI is already delivering value.

We are already at a point where systems can produce solid first drafts, process large amounts of information, and structure outputs in a way that is immediately usable. Not perfect, but good enough to change the starting point.

And once the starting point changes, the rest of the work changes with it.

In practice, this means:

- The first version is no longer created from scratch

- The effort shifts from producing to refining

- The bottleneck moves away from execution

That shift is already happening. The question is not whether it will happen, but how fast organizations adapt to it.

We have seen this shift before

A useful comparison is software development, where this change is already well advanced. Writing code used to be a largely manual activity. Then AI tools began to help write parts of it. Now AI can generate significant portions of the code, suggest improvements, and assist throughout the process.

Developers have not disappeared, but their role has changed. Less time is spent writing code line by line, and more time is spent defining what should be built, guiding the system, and validating the outcome.

The work has moved up a level—from execution to intent and verification.

The same pattern is now emerging across knowledge work. You can already see it in how people work:

- Less time writing from scratch

- More time guiding and refining

- More time reviewing outputs

- More time making decisions

And the impact is already visible:

- Work that used to take days now takes hours

- First drafts are available almost instantly

- Analysis is no longer the main bottleneck

Execution is becoming easier and faster, and in many cases increasingly automated.

What becomes scarce is not the ability to produce content. It is the ability to judge what is actually good.

We are arguing about the wrong thing

This is why the discussion about whether AI will replace jobs is not very useful. It frames the change at the wrong level and leads to predictable but ultimately shallow conclusions.

A more relevant set of questions would be:

- How much of your current work is execution?

- What happens when that part becomes largely automated?

- What remains as your core contribution when AI can produce the first 80%?

The 12–18 month timeline may be too aggressive if you think in terms of entire professions. But at the level of tasks, the shift is already happening—and accelerating.

The organizations that benefit from this are not the ones that try to replace people. They are the ones that rethink how work is done.

That usually means redesigning processes, redefining roles, and accepting that the way work has been structured so far is no longer optimal.

The real gap: we don’t know how to work this way yet

There is one part of this transformation that is still under-discussed.

If execution is increasingly handled by AI, then the human role changes. And that requires capabilities that most organizations have not yet built.

In practice, this is where most AI initiatives struggle. Not because the technology does not work, but because the way work is defined does not support it.

Two areas stand out.

If you cannot describe the work, you cannot automate it

AI systems do not work well with vague instructions. They require structure. They require clarity. And they require a level of explicitness that most knowledge work does not currently have.

For an AI or an agent to perform a task, you need to be able to define:

- What the task actually is

- What inputs it uses

- What the output should look like

- What “good” means

- What constraints apply
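
To make this concrete, the five elements above could be captured in a small structure like the one below. This is only a sketch: the `TaskSpec` class, its field names, and the `missing_elements` helper are hypothetical names chosen for illustration, not part of any particular tool or framework.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical container for the five elements a task definition needs."""

    description: str                 # what the task actually is
    inputs: list[str]                # what inputs it uses
    output_format: str               # what the output should look like
    quality_criteria: list[str]      # what "good" means
    constraints: list[str] = field(default_factory=list)  # what constraints apply

    def missing_elements(self) -> list[str]:
        """Return the names of required elements that are still empty."""
        required = {
            "description": self.description,
            "inputs": self.inputs,
            "output_format": self.output_format,
            "quality_criteria": self.quality_criteria,
        }
        # Constraints are treated as optional here, since some tasks have none.
        return [name for name, value in required.items() if not value]


# A task that "everyone knows how to do" but nobody has fully written down.
spec = TaskSpec(
    description="Summarize the weekly sales report for the leadership team",
    inputs=["weekly sales report"],
    output_format="",
    quality_criteria=[],
)
print(spec.missing_elements())  # ['output_format', 'quality_criteria']
```

The empty fields are where the tacit knowledge sits: the task feels well understood until someone has to write down what the output looks like and what counts as good.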

In theory, this sounds straightforward. In practice, it is often the hardest part.

A lot of knowledge work is based on tacit knowledge—things people know how to do, but have never fully articulated. It works because humans can fill in the gaps. AI cannot.

So one of the most important capabilities going forward is the ability to make work explicit. To take something that is loosely defined and turn it into something structured and repeatable.

That means:

- breaking work into clear steps

- making assumptions visible

- defining quality in a concrete way

AI can help with this, but it does not remove the need for it.

If you cannot describe the work clearly, you cannot automate it. And in many cases, that is exactly where organizations get stuck.

When AI does the work, humans carry the responsibility

The second gap is less discussed, but just as important.

As execution becomes automated, the human role shifts toward reviewing, validating, and improving the output. That sounds straightforward, but it fundamentally changes what it means to “do the work.”

You are no longer responsible for performing the task. You are responsible for the outcome produced by a system.

That is a different kind of responsibility.

It requires the ability to:

- Recognize when outputs are wrong or misleading

- Understand where AI systems can fail

- Define acceptable levels of accuracy

- Build feedback loops to improve performance
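
As a rough illustration of the last two points, an acceptance threshold and a simple feedback signal might look like the sketch below. Everything here is an assumption made for the example: the `ReviewedOutput` record, the idea of scoring an output against a checklist, and the 0.9 threshold are hypothetical, and a real setup would define its own measures.

```python
from dataclasses import dataclass


@dataclass
class ReviewedOutput:
    """Hypothetical record of one AI output scored against a checklist."""

    task_id: str
    passed_checks: int
    total_checks: int

    @property
    def accuracy(self) -> float:
        # An output with no checks is treated as unverified, not as correct.
        if self.total_checks == 0:
            return 0.0
        return self.passed_checks / self.total_checks


def route(output: ReviewedOutput, min_accuracy: float = 0.9) -> str:
    """Accept outputs that meet the agreed threshold; send the rest to a person."""
    return "accept" if output.accuracy >= min_accuracy else "human_review"


def review_rate(history: list[ReviewedOutput], min_accuracy: float = 0.9) -> float:
    """Share of outputs that needed human review: a basic feedback-loop signal."""
    if not history:
        return 0.0
    flagged = sum(1 for o in history if route(o, min_accuracy) == "human_review")
    return flagged / len(history)


history = [
    ReviewedOutput("report-1", passed_checks=10, total_checks=10),
    ReviewedOutput("report-2", passed_checks=6, total_checks=10),
]
print([route(o) for o in history])  # ['accept', 'human_review']
print(review_rate(history))         # 0.5
```

The threshold is a decision someone has to own, and tracking how often outputs fall below it is one way to see whether the system is improving over time.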

At the organizational level, this becomes even more complex. It is no longer enough that individuals use AI tools effectively. The organization needs to be able to rely on the outputs.

That means:

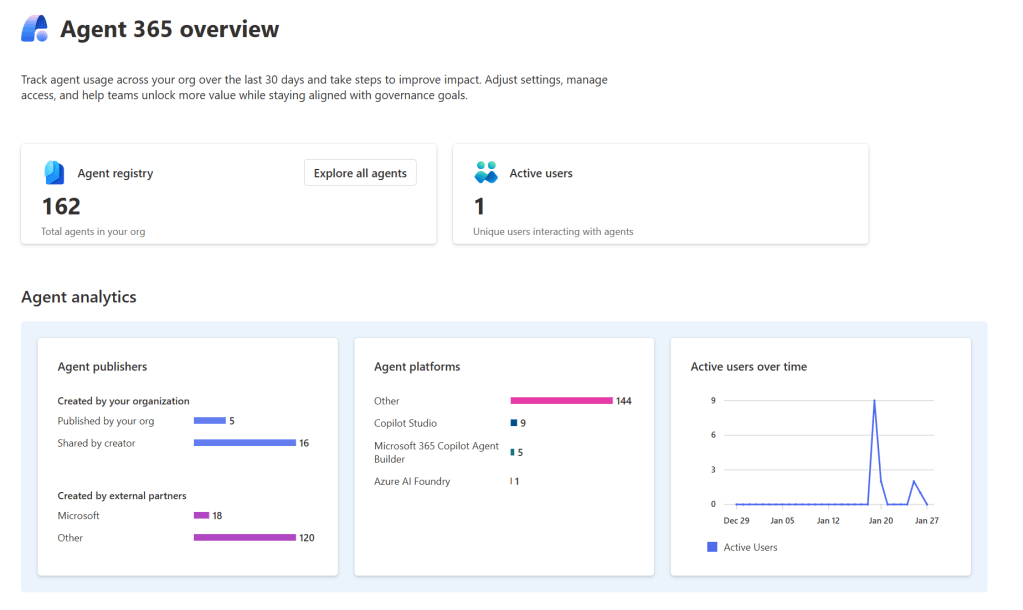

- Defining governance models

- Establishing clear working practices

- Assigning accountability

- Aligning with applicable regulation

In other words, the focus shifts from doing tasks to managing systems that do the tasks. Most organizations are not set up for that yet.

This is not just about AI—it is about how work changes

It is easy to focus on how capable AI will become. But the bigger shift is not technical. It is structural.

We are moving towards a model where:

- Execution is increasingly automated

- Humans define what needs to be done

- Humans guide the system

- Humans validate the outcome

That is not a small change. It challenges how work is defined, how roles are structured, and how value is created.

And it leads to a more uncomfortable question than “Will AI replace jobs?”

What is your role when most of the execution is no longer yours to do?

That is the question organizations and individuals need to start answering now.
